liblab llama SDK challenge

This tutorial includes the following SDK languages and versions:

| TypeScript v1 | TypeScript v2 | Java | Python v1 | Python v2 | C# | Go | PHP |
|---|---|---|---|---|---|---|---|
| ✅ | ❌ | ❌ | ✅ | ❌ | ❌ | ❌ | ❌ |

Can you build an SDK to lead your llama back to its pen, collecting berries on the way?

The llama SDK challenge is a fun way to learn how to build an SDK with liblab. You'll start with a game that exposes an API for controlling a llama in a maze, and you'll build an SDK that wraps that API. Your goal is to use this SDK to get the llama back to its pen, collecting berries on the way.

This tutorial covers using TypeScript or Python. You can choose which language you want to use, or use one of the other languages supported by liblab, but there won't be guides or code snippets for those in this tutorial.

Prerequisites

To complete this tutorial, you'll need:

- A liblab account

- The liblab CLI, installed and logged in

- Docker

- Visual Studio Code

- VS Code Dev Containers extension

All the components will run in dev containers in VS Code. This means you don't need to install anything on your machine besides Docker and VS Code, and all the configuration will happen inside the dev containers.

This tutorial also assumes you have some basic knowledge of TypeScript or Python, and are able to clone a git repository and run a command in a terminal.

Steps

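In this tutorial you'll:

- Get the code
- Run the game
- Explore the game API
- Create a new SDK
- Use the SDK to move the llama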

Get the code

You can find the llama SDK challenge code on GitHub at github.com/liblaber/llama-game-demo.

- Make sure Docker is installed and running on your machine.

- Clone this repo: github.com/liblaber/llama-game-demo.

- Open the repo in VS Code. You'll see a prompt to reopen the folder in a dev container. Click Reopen in Container.

The repo will be opened in a container, with all the necessary dependencies installed inside that container so that you can run the game. Nothing will be installed on your local machine.

This code has a few components:

- frontend - This is the frontend of the game. This is a Vite.js app that uses Phaser.js to render the game. This frontend is compiled and made available by the API.
- api - This is a Python FastAPI app that hosts the game, as well as providing an API to control the llama in the game.

The api is used to control the llama in the game. It uses a websocket to send commands to the frontend, which then renders the llama moving around the map. You won't need to change any of the game code. You only need to run both components so that you can generate an SDK and control the game.

When the container is created, all the necessary dependencies will be installed, such as Python and npm, and the game front end will be built and copied into the API project. This may take a few minutes.

Run the game

The game is configured to run from the dev container. Run the following command in the terminal inside VS Code, so that it runs inside the dev container:

    ./scripts/start-game.sh

This will start both the front end and the API. VS Code will forward the ports from the dev container so that you can access the game in your browser as if it was running locally, not in a container.

    vscode ➜ /workspaces/llama_game_demo (main) $ ./scripts/start-game.sh
    INFO: Will watch for changes in these directories: ['/workspaces/llama_game_demo/api']
    INFO: Uvicorn running on http://0.0.0.0:8000 (Press CTRL+C to quit)
    INFO: Started reloader process [16702] using WatchFiles
    INFO: Started server process [16704]
    INFO: Waiting for application startup.
    INFO: Application startup complete.

View the game

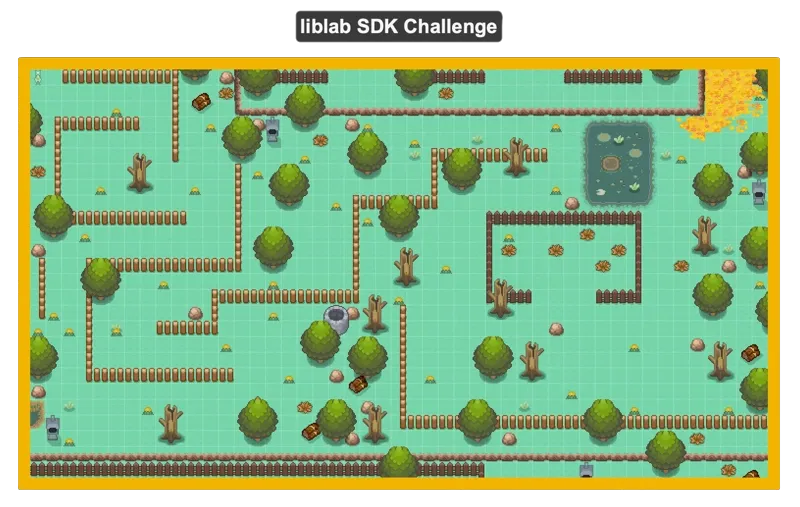

To see the game running, head to localhost:8000. You should see a llama in a maze.

The maze is divided into squares, and each square is a single step for the llama. The map has berries you can collect, and obstacles you have to avoid, such as trees and fences.

A berry. Llamas like to eat these.

A fence. This is dangerous to llamas.

This game can only be controlled using the API. You can't control the llama directly.

Verify the API is running

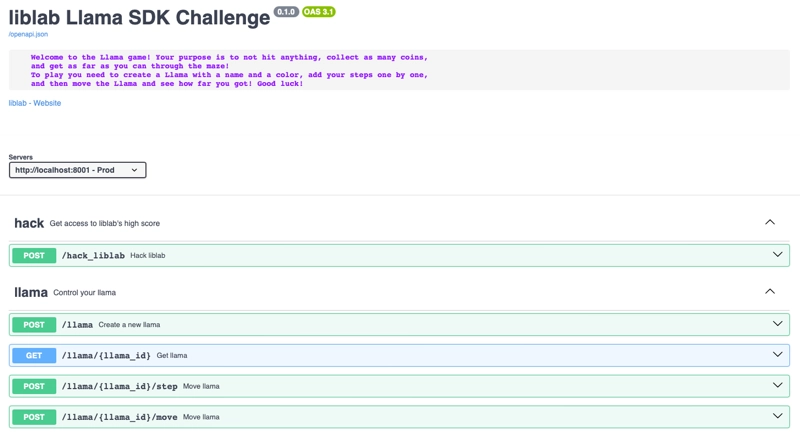

To verify the API is running, head to localhost:8000/docs. You should see the API docs.
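
If you'd rather check from a script than from a browser, a minimal sketch along these lines may help. It assumes the game is served at http://localhost:8000 as described above. The is_reachable and report helpers are hypothetical names written for this example, and they use only the Python standard library.

```python
from urllib.error import URLError
from urllib.request import urlopen


def is_reachable(url: str, timeout: float = 2.0) -> bool:
    """Return True if a GET request to the URL answers with HTTP 200."""
    try:
        with urlopen(url, timeout=timeout) as response:
            return response.status == 200
    except (URLError, OSError):
        # Connection refused, timeout, or an HTTP error status
        return False


def report(base_url: str = "http://localhost:8000") -> None:
    """Print whether the game page and the API docs respond."""
    for path in ("/", "/docs"):
        state = "up" if is_reachable(base_url + path) else "not reachable"
        print(f"{base_url}{path}: {state}")
```

Calling report() while the game is running should show both URLs as up. If either is not reachable, check that the start script is still running in the dev container terminal.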

Explore the game API

You can use the API docs at localhost:8000/docs to explore the API.

The flow for controlling the llama is:

- Create a llama

- Define a set of steps for the llama to take

- Move the llama, following the steps you defined

The API endpoint you want to interact with is the llama endpoint. This has a number of operations that you can use to control the llama:

- POST /llama - This will create a new llama. You can only have one llama at a time, and it is this llama that you'll need to move around the map. This request takes the following body:

      {
        "color": "black",
        "name": "Libby"
      }

  The color can be one of a set of colors, and these are defined in the LlamaColor schema. The name can be any string.

  This call will return a new llama with the following fields:

      {
        "color": "black",
        "curr_coordinates": [0, 5],
        "llama_id": 123,
        "name": "Libby",
        "score": 12,
        "start_coordinates": [0, 0],
        "status": "alive",
        "steps_list": []
      }

  The most important field is the llama_id. This is the ID of the llama that you'll need to use to move the llama around the map.

- POST /llama/{llama_id}/step - This will add a new step for the llama to take once you start it moving. This takes the following body:

      {
        "llama_id": 123,
        "direction": "up",
        "steps": 1
      }

  The llama_id is the ID of the llama you want to move. The direction is the direction you want the llama to move in. This can be one of up, down, left, or right, and is defined in the Direction schema. The steps is the number of steps you want the llama to take in that direction. On the game map, each square is one step.

- POST /llama/{llama_id}/move - This will start the llama moving. This takes the following body:

      {
        "llama_id": 123
      }

  The llama_id is the ID of the llama you want to move. This returns the following response:

      {
        "llama_id": 123,
        "position": [0, 4],
        "score": 5,
        "status": "alive"
      }

  The position is the new position of the llama. The score is the number of berries the llama has collected. The status is the status of the llama, from the LlamaStatus schema. This can be alive or dead. alive means the llama is still alive, and dead means the llama has hit an obstacle and died 🪦.
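
To make the three calls concrete, here is a minimal sketch of how they could be made directly with the Python standard library, before any SDK exists. The paths and request bodies are taken from the descriptions above. The helper names (post_request, create_llama, add_step, move, send) are hypothetical and chosen for this example, and the base URL assumes the game is running locally on port 8000.

```python
import json
from urllib.request import Request, urlopen

BASE_URL = "http://localhost:8000"  # assumed: where the game API is running


def post_request(path: str, body: dict) -> Request:
    """Build a JSON POST request for the game API (nothing is sent yet)."""
    return Request(
        BASE_URL + path,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def create_llama(name: str, color: str) -> Request:
    """Request that creates a new llama."""
    return post_request("/llama", {"name": name, "color": color})


def add_step(llama_id: int, direction: str, steps: int) -> Request:
    """Request that queues a step for the llama."""
    body = {"llama_id": llama_id, "direction": direction, "steps": steps}
    return post_request(f"/llama/{llama_id}/step", body)


def move(llama_id: int) -> Request:
    """Request that starts the llama moving through its queued steps."""
    return post_request(f"/llama/{llama_id}/move", {"llama_id": llama_id})


def send(request: Request) -> dict:
    """Send a request to the running game and decode the JSON response."""
    with urlopen(request) as response:
        return json.loads(response.read())
```

With the game running, send(create_llama("Libby", "black")) would return the new llama, whose llama_id you can then pass to add_step and move. Everything here is untyped strings and dictionaries, which is the gap an SDK fills.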

Create a new SDK

Rather than call the API directly, you'll create an SDK to call the API for you. This will make it easier to control the llama, taking advantage of features like type checking and auto-completion, something you don't get when calling an API directly.
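
To illustrate what type checking adds, here is a minimal sketch in plain Python. The Direction and StepInput classes below are hypothetical stand-ins written for this example, not the code that liblab generates. The direction values come from the Direction schema described in the API section.

```python
from dataclasses import dataclass
from enum import Enum


class Direction(Enum):
    """Allowed directions, mirroring the API's Direction schema."""
    UP = "up"
    DOWN = "down"
    LEFT = "left"
    RIGHT = "right"


@dataclass(frozen=True)
class StepInput:
    """Hypothetical typed request body for adding a step."""
    llama_id: int
    direction: Direction
    steps: int

    def to_body(self) -> dict:
        # Convert the typed model back into the JSON shape the API expects
        return {
            "llama_id": self.llama_id,
            "direction": self.direction.value,
            "steps": self.steps,
        }


def parse_direction(text: str) -> Direction:
    """Turn a raw string into a Direction, rejecting unknown values early."""
    try:
        return Direction(text)
    except ValueError:
        allowed = ", ".join(d.value for d in Direction)
        raise ValueError(
            f"Unknown direction {text!r}; expected one of: {allowed}"
        ) from None
```

With models like these, a typo such as "sideways" fails in your own code with a clear message rather than as a rejected request, and an editor can suggest the valid Direction members as you type.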

The game API has an OpenAPI spec available at localhost:8000/openapi.json that you can use to generate an SDK.
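
An OpenAPI spec lists every operation under its paths object, keyed by path and then by HTTP method. As a rough sketch, the function below walks that structure and prints what it finds. The sample_spec fragment is hand-written for this example, and its operationId values are made up, so the real spec from the game may name things differently.

```python
HTTP_METHODS = {"get", "put", "post", "delete", "patch", "options", "head", "trace"}


def list_operations(spec: dict) -> list[tuple[str, str, str]]:
    """Return (METHOD, path, operationId) for every operation in an OpenAPI spec."""
    operations = []
    for path, path_item in spec.get("paths", {}).items():
        for method, operation in path_item.items():
            if method in HTTP_METHODS:  # skip keys such as "parameters"
                operations.append(
                    (method.upper(), path, operation.get("operationId", ""))
                )
    return sorted(operations, key=lambda op: (op[1], op[0]))


# A trimmed-down, hand-written spec fragment used only to demonstrate the function.
# The operationId values here are made up for this example.
sample_spec = {
    "paths": {
        "/llama": {"post": {"operationId": "create_llama"}},
        "/llama/{llama_id}/step": {"post": {"operationId": "add_steps"}},
        "/llama/{llama_id}/move": {"post": {"operationId": "move_llama"}},
    }
}

for method, path, operation_id in list_operations(sample_spec):
    print(f"{method:6} {path} -> {operation_id}")
```

To inspect the real spec, you could download localhost:8000/openapi.json, parse it with json.load, and pass the result to list_operations.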

Create the liblab config file

To generate an SDK, you'll need to create a liblab config file. This tells liblab how to generate the SDK. You can create this file by running the following command in your terminal:

    liblab init --spec http://localhost:8000/openapi.json

This will create a file called liblab.config.json in your current folder, pointing to the OpenAPI spec file for the llama game API. Open this file in VS Code.

Configure the SDK

Once you have the config file, you'll need to configure it to generate an SDK for the llama game API. It will be created with multiple options already set, so replace the contents of the file with the following, depending on which SDK language you are planning to use, TypeScript or Python:

TypeScript:

    {
      "sdkName": "llama-game",
      "specFilePath": "http://localhost:8000/openapi.json",
      "baseUrl": "http://host.docker.internal:8000",
      "languages": [
        "typescript"
      ],
      "customizations": {
        "devContainer": true
      }
    }

Python v1:

    {
      "sdkName": "llama-game",
      "specFilePath": "http://localhost:8000/openapi.json",
      "baseUrl": "http://host.docker.internal:8000",
      "languages": [
        "python"
      ],
      "customizations": {
        "devContainer": true
      }
    }

These options are:

| Option | Description |
|---|---|
| sdkName | This is the name of the SDK, llama-game. |
| specFilePath | This is the path to the OpenAPI spec file from the running API. |
| baseUrl | This is the base URL of the running API. This is set to http://host.docker.internal:8000 so that you can use the SDK from one dev container and access the API from another dev container. |
| languages | This is the list of languages you want to generate an SDK for, either typescript or python. |
| customizations/devContainer | This is where you can customize the SDK. In this case, you'll use this to tell liblab to create a VS Code dev container for the SDK. |
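
If you expect to switch between the two languages, a small script can write the config for you instead of editing it by hand. This is only a sketch: build_config and write_config are hypothetical helper names, and the script uses nothing beyond the options listed in the table above.

```python
import json
from pathlib import Path


def build_config(language: str) -> dict:
    """Build the liblab config shown above for "typescript" or "python"."""
    if language not in ("typescript", "python"):
        raise ValueError("This tutorial only covers typescript and python")
    return {
        "sdkName": "llama-game",
        "specFilePath": "http://localhost:8000/openapi.json",
        "baseUrl": "http://host.docker.internal:8000",
        "languages": [language],
        "customizations": {"devContainer": True},
    }


def write_config(language: str, folder: Path = Path(".")) -> Path:
    """Write liblab.config.json into the folder and return its path."""
    path = folder / "liblab.config.json"
    path.write_text(json.dumps(build_config(language), indent=2) + "\n")
    return path
```

Calling write_config("python") from the repo root would overwrite liblab.config.json with the Python variant of the config.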

Generate the SDK

To generate the SDK, run the following command in your terminal:

    liblab build

This will generate the SDK for the language you specified, and download this into the output folder.

    vscode ➜ /workspaces/llama_game_demo (main) $ liblab build
    Your SDKs are being generated. Meanwhile how about checking out our
    Dashboard: https://app.liblab.com
    It contains additional information about your request.
    ✓ Python built
    ✓ Doc built
    Successfully generated SDKs downloaded. You can find them inside the "output" folder
    Successfully generated SDK for python ♡

It will also generate documentation for the SDK, and provide you with a link to download it, and an option to publish it.

    Action   Link
    ──────── ──────────────────────────────────────────────────────────────────────────────────────────────────────────────
    Preview  https://docs.liblab.com/liblab/preview/7d86c5df-d7e6-4414-812e-904ec8bdf841
    Download https://prod-liblab-api-stack-docs.s3.us-east-1.amazonaws.com/7d86c5df-d7e6-4414-812e-904ec8bdf841/bundle.zip

    ? Would you like to approve and publish these docs now? (Y/n)

Use the SDK to move the llama

Once the SDK has been built, you can use it to control the llama. The SDK will be in the output folder, in a sub folder by language. For example, if you generated a TypeScript SDK, it will be in output/typescript.

Open the SDK language folder in VS Code. You'll see a prompt to reopen the folder in a dev container. Click Reopen in Container. The container will be built, installing the SDK ready for you to use.

Check out the sample

Every SDK has an example in the examples folder. The generated example uses the first GET endpoint from the API spec. In this case, it will try to get a llama (and fail, as you haven't created one yet):


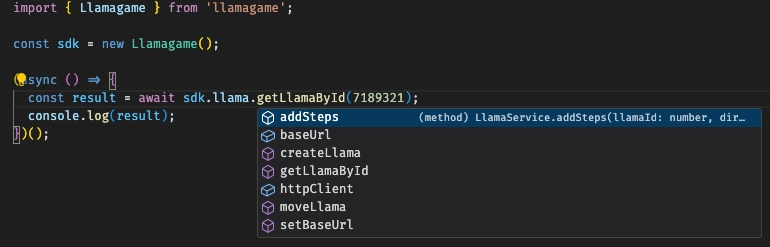

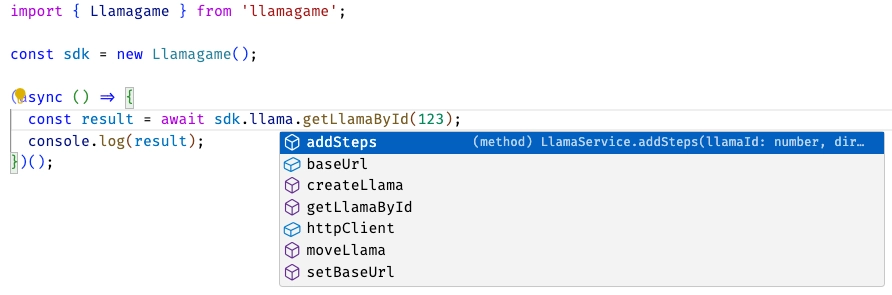

The TypeScript sample is in the examples folder, with the code in the src/index.ts file, along with all the boilerplate configuration files to run the sample using npm. As part of the dev container creation the relevant npm packages are installed, and the SDK is compiled.

    import { Llamagame } from 'llamagame';

    const sdk = new Llamagame();

    (async () => {
      const result = await sdk.llama.getLlamaById(123);
      console.log(result);
    })();

To run this example, navigate to the examples folder and run the sample using npm:

    cd examples
    npm run start

You will get an error from the API - there are no llamas defined in the game yet, so accessing llama ID 123 will fail.

    BadRequest [Error]
        at new BaseHTTPError (/workspaces/llama_game_demo/output/typescript/dist/commonjs/http/errors/base.js:24:22)
        at new BadRequest (/workspaces/llama_game_demo/output/typescript/dist/commonjs/http/errors/BadRequest.js:6:9)
        at throwHttpError (/workspaces/llama_game_demo/output/typescript/dist/commonjs/http/httpExceptions.js:69:25)
        at HTTPLibrary.handleResponse (/workspaces/llama_game_demo/output/typescript/dist/commonjs/http/HTTPLibrary.js:94:42)
        at HTTPLibrary.get (/workspaces/llama_game_demo/output/typescript/dist/commonjs/http/HTTPLibrary.js:29:28)
        at process.processTicksAndRejections (node:internal/process/task_queues:95:5)
        at async LlamaService.getLlamaById (/workspaces/llama_game_demo/output/typescript/dist/commonjs/services/llama/Llama.js:40:26) {
      title: 'Bad Request',
      statusCode: 400,
      detail: { detail: 'Provide a valid ID for a llama' }
    }

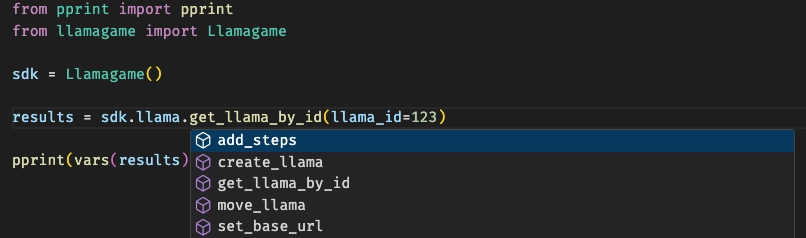

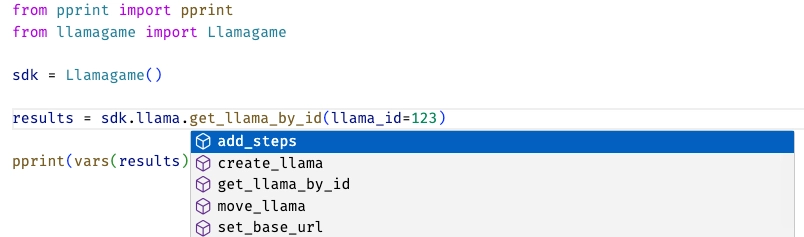

The Python sample is in the examples/sample.py file. As part of the dev container creation, the relevant pip packages are installed, and the SDK is compiled into a wheel and installed into the local environment.

    from os import getenv
    from pprint import pprint

    from llamagame import Llamagame

    sdk = Llamagame()

    results = sdk.llama.get_llama_by_id(llama_id=123)

    pprint(vars(results))

To run this example, navigate to the examples folder and run the sample.py file:

    cd examples
    python sample.py

You will get an error from the API - there are no llamas defined in the game yet, so accessing llama ID 123 will fail.

http_exceptions.client_exceptions.BadRequestException: BadRequestException(status_code=400, message='{"detail":"Provide a valid ID for a llama"}')
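
When you start writing your own code, you may prefer to handle this failure rather than let the script crash. The sketch below takes the lookup method as a parameter, so it runs without the SDK installed. In your own code you would pass sdk.llama.get_llama_by_id, the method used in the sample above. The try_get_llama and fake_get_llama_by_id names are hypothetical, and the broad except clause is an assumption made because the exact exception classes depend on the generated SDK.

```python
from typing import Any, Callable, Optional


def try_get_llama(get_llama_by_id: Callable[..., Any], llama_id: int) -> Optional[Any]:
    """Call the SDK lookup and return None instead of raising if it fails."""
    try:
        return get_llama_by_id(llama_id=llama_id)
    except Exception as error:  # broad on purpose: exception classes vary by SDK
        print(f"Could not fetch llama {llama_id}: {error}")
        return None


# Stand-in for sdk.llama.get_llama_by_id so the sketch runs without the game
def fake_get_llama_by_id(llama_id: int) -> dict:
    if llama_id != 1:
        raise RuntimeError("Provide a valid ID for a llama")
    return {"llama_id": 1, "name": "Libby"}


print(try_get_llama(fake_get_llama_by_id, 123))  # prints the error message, then None
print(try_get_llama(fake_get_llama_by_id, 1))
```

Once you know which exception your generated SDK raises, such as the BadRequestException shown in the output above, catching that class specifically is usually the better choice.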

Move the llama

Your task now is to move the llama, trying to get it into the pen and collect as many berries as you can along the way using the SDK. The steps to take are:

- Create a llama, and get its ID

- Define a set of steps for the llama to take to get to the pen and collect berries

- Move the llama, following the steps you defined

- Report the status of the llama
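
Before sending a plan to the game, it can help to trace it on paper or in code. The sketch below simulates a list of (direction, steps) pairs on a grid. It rests on assumptions that this tutorial does not confirm: that x grows to the right and y grows downward, and that obstacles sit at the coordinates you give it. The obstacle position in the example is invented, so check the real map in your browser.

```python
# Assumed axes: x grows to the right, y grows downward (screen-style coordinates)
MOVES = {"up": (0, -1), "down": (0, 1), "left": (-1, 0), "right": (1, 0)}


def walk(start, plan, obstacles=frozenset()):
    """Follow (direction, steps) pairs one square at a time.

    Returns (final_position, alive). Walking stops at the first obstacle hit.
    """
    x, y = start
    for direction, steps in plan:
        dx, dy = MOVES[direction]
        for _ in range(steps):
            x, y = x + dx, y + dy
            if (x, y) in obstacles:
                return (x, y), False
    return (x, y), True


plan = [("down", 1), ("right", 5), ("down", 1)]
print(walk((0, 0), plan))                      # ((5, 2), True)
print(walk((0, 0), plan, obstacles={(3, 1)}))  # ((3, 1), False)
```

A plan that survives the simulation can then be translated one pair at a time into add-step calls on the SDK, followed by a single move call.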

Edit the code in the examples folder, and run it as described above.

Some hints:

- When you generated the SDK, liblab also generated docs. These may help.

- One of the many advantages of using an SDK is that you get type checking and auto-completion. Use this to your advantage. For example, you can explore the functionality of the SDK by tapping the . key!

- The SDK methods should be sensibly named!

If you want more help, here's some sample code to get you started (this won't get the llama to the pen but will get you started):

TypeScript:

    // An example of programming against the llama SDK challenge game in TypeScript
    import { Llamagame, LlamaInput } from 'llamagame';

    // Create the SDK client
    const llamaGameClient = new Llamagame();

    (async () => {
      // Create a llama - for this we need a llama input
      // In this example, use libby the liblab llama
      const llamaInput: LlamaInput = {
        name: 'libby the llama',
        color: 'white',
      };

      // Create the llama
      const llama = await llamaGameClient.llama.createLlama(llamaInput);
      console.log(`Created llama ${llama.llama_id}`);

      // Build a list of moves for the llama
      // Down 1
      // Right 5
      // Down 1
      // This will get a berry

      // Down 1
      await llamaGameClient.llama.addSteps(llama.llama_id, 'down', 1);

      // Right 5
      await llamaGameClient.llama.addSteps(llama.llama_id, 'right', 5);

      // Down 1
      await llamaGameClient.llama.addSteps(llama.llama_id, 'down', 1);

      // Now we have the steps, run the moves and print the result
      const result = await llamaGameClient.llama.moveLlama(llama.llama_id);
      console.log(`Llama ${llama.name} is ${result.status}`);
      console.log(`Score: ${result.score}`);
    })();

Python v1:

    """
    An example of programming against the llama SDK challenge game in Python
    """
    from llamagame import Llamagame
    from llamagame.models import Direction, LlamaColor, LlamaInput

    # Create the SDK client
    llama_game_client = Llamagame()

    # Create a llama - for this we need a llama input
    # In this example, use libby the liblab llama
    llama_input = LlamaInput(
        name="libby the llama",
        color=LlamaColor.WHITE,
    )

    # Create the llama
    llama = llama_game_client.llama.create_llama(llama_input)
    print(f"Created llama {llama.llama_id}")

    # Build a list of moves for the llama
    # Down 1
    # Right 5
    # Down 1
    # This will get a berry

    # Down 1
    llama_game_client.llama.add_steps(
        steps=1, direction=Direction.DOWN, llama_id=llama.llama_id
    )

    # Right 5
    llama_game_client.llama.add_steps(
        steps=5, direction=Direction.RIGHT, llama_id=llama.llama_id
    )

    # Down 1
    llama_game_client.llama.add_steps(
        steps=1, direction=Direction.DOWN, llama_id=llama.llama_id
    )

    # Now we have the steps, run the moves and print the result
    result = llama_game_client.llama.move_llama(llama_id=llama.llama_id)
    print(f"Llama {llama.name} is {result.status}")
    print(f"Score: {result.score}")

Good luck, and get that llama to the pen! 🦙